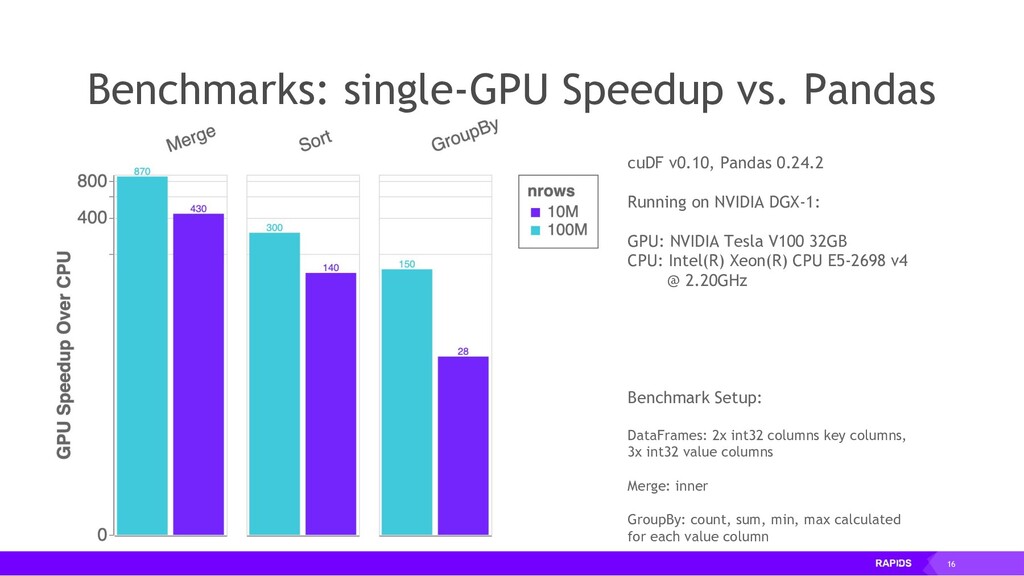

Speedup Python Pandas with RAPIDS GPU-Accelerated Dataframe Library called cuDF on Google Colab! - Bhavesh Bhatt

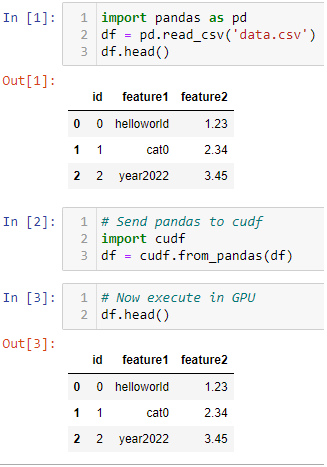

Gilberto Titericz Jr on Twitter: "Want to speedup Pandas DataFrame operations? Let me share one of my Kaggle tricks for fast experimentation. Just convert it to cudf and execute it in GPU

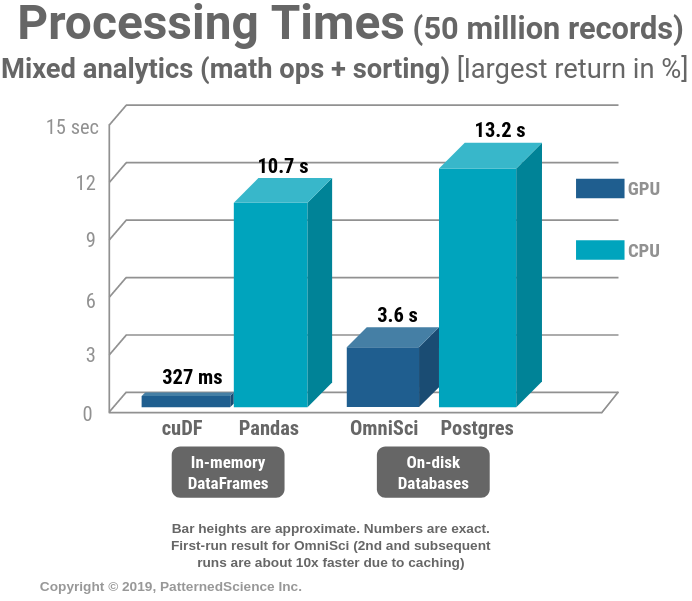

Talk/Demo: Supercharging Analytics with GPUs: OmniSci/cuDF vs Postgres/Pandas/PDAL | Masood Khosroshahy (Krohy) — Senior Solution Architect (AI & Big Data)

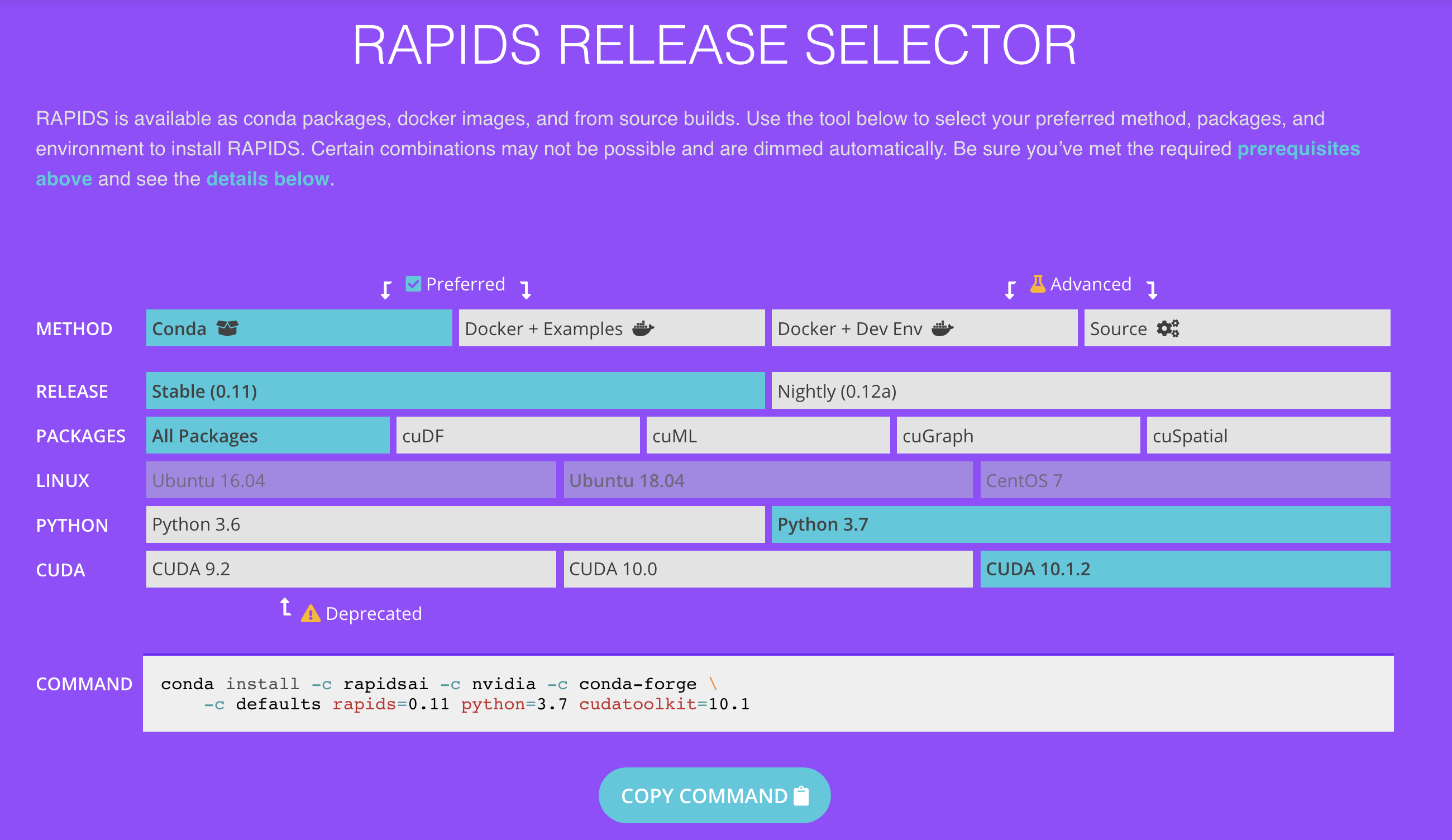

Pandas DataFrame Tutorial - Beginner's Guide to GPU Accelerated DataFrames in Python | NVIDIA Technical Blog